What Is Caching: Benefits, Use Cases, and Implementation

By Alex Carter on November 1, 2024

Efficient data access is crucial for improving performance in applications, databases, and web services. High traffic, frequent queries, and large datasets can all result in system slowdowns and cost increases. Caching addresses these issues by speeding up data retrieval, reducing database load, and keeping response times more consistent. It plays a role in a wide range of technologies, from content delivery networks to microservices.

What Is Caching?

Caching is a technique used to store frequently accessed data in a high-speed storage layer for quicker retrieval. Instead of repeatedly fetching information from slower primary storage, caching keeps commonly used data readily available, improving system performance and efficiency. This method reduces load times, minimizes server requests, and enhances the overall user experience across applications, databases, and web services.

How Does Caching Work?

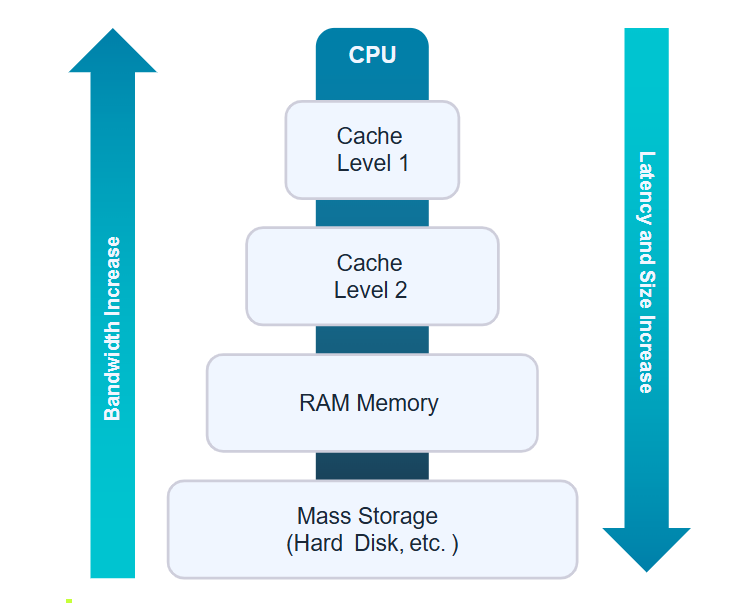

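Cached data is commonly stored in fast-access memory (RAM), with a software layer deciding what to keep and when to discard it. When a request arrives, the application checks the cache first: if the data is there (a cache hit), it is returned immediately; if not (a cache miss), it is fetched from the slower primary storage and usually saved in the cache for next time. This reduces dependency on slower storage systems.

When the cache sits in front of a database, the application still needs an efficient and reliable way to connect to and query that database (for example, a MySQL connection), because every cache miss falls through to it and cached entries must stay consistent with the stored data.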
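The lookup flow described in this section is often implemented as a "cache-aside" read. The following is a minimal sketch of that idea, not a production implementation: the `CacheAside` class, the `slow_lookup` function, and the in-process dictionary standing in for RAM-based storage are all illustrative assumptions.

```python
from typing import Any, Callable, Dict, Hashable


class CacheAside:
    """Check the cache first; on a miss, load from the slow source and keep the result."""

    def __init__(self, load_from_source: Callable[[Hashable], Any]) -> None:
        self._load = load_from_source
        self._store: Dict[Hashable, Any] = {}  # stand-in for fast in-memory storage
        self.hits = 0
        self.misses = 0

    def get(self, key: Hashable) -> Any:
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Cache miss: fall through to the slower primary storage.
        self.misses += 1
        value = self._load(key)
        self._store[key] = value
        return value


# Hypothetical slow backend; a real one would query a database.
calls = []


def slow_lookup(user_id: int) -> dict:
    calls.append(user_id)
    return {"id": user_id, "name": f"user-{user_id}"}


cache = CacheAside(slow_lookup)
first = cache.get(7)   # miss: goes to the backend
second = cache.get(7)  # hit: served from memory
```

In this sketch the backend is called only once for the repeated key, which is the effect that reduces database load. Entries never expire here; a real cache would also need an eviction or expiry policy.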

Benefits of Caching in System Performance

- Improved Data Access: Storing frequently used data in RAM enables faster retrieval than disk-based storage, resulting in better program responsiveness;

- Reduced Database Expenses: A cache that sustains a high number of I/O operations per second (IOPS) absorbs repetitive database calls, which can cut operational expenses;

- Consistent Performance: Caching reduces the impact of heavy traffic on databases, ensuring stable performance during peak usage;

- Less Database Strain: Frequently requested data is cached, lowering the workload on primary storage and improving efficiency;

- Increased Read Throughput: Distributed caching efficiently handles high request volumes, improving scalability and system reliability.

Different Types of Caches

CPU Cache

A small, fast memory unit built into a computer’s central processing unit (CPU) holds frequently used data and instructions. This cache reduces the need for the CPU to access slower main memory or storage, increasing processing performance.

Memory Cache

A designated section of RAM temporarily holds frequently accessed data, reducing delays when retrieving information from slower storage devices like hard drives or network resources. This improves application performance and responsiveness.
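As one illustration of an in-memory cache at the application level, Python's standard library provides `functools.lru_cache`, which keeps recent results of a function in RAM and evicts the least recently used entry when full. The `load_profile` function and the tiny `maxsize=2` are assumptions chosen only to make the eviction visible.

```python
from functools import lru_cache

source_reads = []  # records every call that reached the "slow" source


@lru_cache(maxsize=2)
def load_profile(user_id: int) -> str:
    # Stand-in for a slow read from disk or a network resource.
    source_reads.append(user_id)
    return f"profile-{user_id}"


load_profile(1)  # miss
load_profile(1)  # hit, served from memory
load_profile(2)  # miss
load_profile(3)  # miss, evicts user 1 (least recently used)
load_profile(1)  # miss again, because user 1 was evicted
stats = load_profile.cache_info()
```

`cache_info()` reports hits, misses, and current size, which is a simple way to check whether a cache of a given size is actually helping.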

Disk Cache

A portion of RAM stores recently read or written disk data, speeding up read/write operations on hard disk drives (HDDs) or solid-state drives (SSDs). This caching approach improves overall system efficiency by reducing direct disk accesses.

Browser Cache

Web browsers store copies of web content, such as HTML pages, images, and media files, in temporary storage. This allows faster page loading when revisiting websites, as content is retrieved from the cache instead of being downloaded again.

Distributed Cache

A network-wide caching system stores frequently accessed data across multiple servers. By reducing redundant data requests, distributed caching improves system scalability and allows more users to access data with lower latency.
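To make the idea of spreading cached data across servers concrete, here is a minimal sketch that assigns each key to one of several nodes by hashing it. The `ShardedCache` class and node names are hypothetical, and plain dictionaries stand in for remote cache servers. It uses simple modulo sharding; real systems often prefer consistent hashing so that adding or removing a node moves fewer keys.

```python
import hashlib
from typing import Any, Dict, List


class ShardedCache:
    """Spread keys across several cache nodes using a stable hash of the key."""

    def __init__(self, node_names: List[str]) -> None:
        self._names = list(node_names)
        # Each dict stands in for a separate cache server.
        self._nodes: Dict[str, Dict[str, Any]] = {name: {} for name in self._names}

    def node_for(self, key: str) -> str:
        # sha256 gives the same result in every process, unlike built-in hash().
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        index = int.from_bytes(digest[:8], "big") % len(self._names)
        return self._names[index]

    def set(self, key: str, value: Any) -> None:
        self._nodes[self.node_for(key)][key] = value

    def get(self, key: str, default: Any = None) -> Any:
        return self._nodes[self.node_for(key)].get(key, default)


cache = ShardedCache(["node-a", "node-b", "node-c"])
cache.set("user:42", "Alex")
value = cache.get("user:42")
```

Because every client computes the same node for the same key, any application server can find an entry without a central lookup table.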

Common Use Cases for Caching

Mobile Applications

As mobile app usage continues to rise, caching plays a vital role in maintaining performance. It speeds up load times, enhances user experience, and reduces the strain on backend systems. By keeping frequently used data in memory, apps can support more users efficiently while controlling operational costs.

Content Delivery Networks (CDNs)

Serving internet traffic across many regions can be expensive, especially when infrastructure has to be duplicated globally. A CDN lowers these expenses by storing content such as videos, images, and web pages on a network of edge servers. This enables users to retrieve cached content from a nearby location, lowering load times, boosting data transmission rates, and lessening the pressure on the origin servers.

DNS Caching

When a domain is accessed, DNS servers translate it into an IP address. Caching these lookups at multiple levels, including the operating system, ISP resolvers, and dedicated DNS servers, reduces the time needed to resolve domain names and improves network performance.
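The multi-level lookup described here can be sketched as a chain of resolvers, each with its own cache and each forwarding misses to the next level. This is a simplified model under stated assumptions: the `Resolver` class, the `authoritative_lookup` function, and the sample record are hypothetical, and the expiry that real DNS caches apply through per-record TTLs is left out.

```python
from typing import Callable, Dict


class Resolver:
    """One caching level; forwards to the next level up only on a miss."""

    def __init__(self, name: str, upstream: Callable[[str], str]) -> None:
        self.name = name
        self._upstream = upstream
        self._cache: Dict[str, str] = {}
        self.lookups_forwarded = 0

    def resolve(self, hostname: str) -> str:
        if hostname in self._cache:
            return self._cache[hostname]
        self.lookups_forwarded += 1
        address = self._upstream(hostname)
        self._cache[hostname] = address
        return address


# Hypothetical authoritative data (192.0.2.0/24 is reserved for documentation).
AUTHORITATIVE = {"example.com": "192.0.2.10"}
authoritative_queries = []


def authoritative_lookup(hostname: str) -> str:
    authoritative_queries.append(hostname)
    return AUTHORITATIVE[hostname]


isp = Resolver("isp", authoritative_lookup)
laptop = Resolver("os-laptop", isp.resolve)
phone = Resolver("os-phone", isp.resolve)

laptop.resolve("example.com")  # misses at the OS and ISP levels
laptop.resolve("example.com")  # answered by the laptop's own cache
phone.resolve("example.com")   # misses locally, answered by the ISP cache
```

Three client lookups produce a single query to the authoritative source, which is how layered caching shortens resolution time for everyone behind the shared resolver.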

Session Management

Web applications use session data, such as login information and shopping cart contents, to keep track of each user's state. A centralized session cache makes this data available to every server handling requests, resulting in a more consistent experience, improved speed, and better handling of heavy traffic.
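A rough sketch of a centralized session cache follows. The `SessionStore` and `AppServer` classes are hypothetical, and an in-process dictionary stands in for what would normally be a networked in-memory store shared by all servers.

```python
import secrets
from typing import Dict, List, Optional


class SessionStore:
    """Stand-in for a shared session cache reachable from every app server."""

    def __init__(self) -> None:
        self._sessions: Dict[str, dict] = {}

    def create(self, data: dict) -> str:
        session_id = secrets.token_hex(16)  # random, hard-to-guess identifier
        self._sessions[session_id] = dict(data)
        return session_id

    def load(self, session_id: str) -> Optional[dict]:
        return self._sessions.get(session_id)


class AppServer:
    """Keeps no session state of its own; everything lives in the shared store."""

    def __init__(self, name: str, store: SessionStore) -> None:
        self.name = name
        self._store = store

    def login(self, username: str) -> str:
        return self._store.create({"user": username, "cart": []})

    def add_to_cart(self, session_id: str, item: str) -> None:
        session = self._store.load(session_id)
        if session is None:
            raise KeyError("unknown session")
        session["cart"].append(item)

    def view_cart(self, session_id: str) -> List[str]:
        session = self._store.load(session_id)
        if session is None:
            raise KeyError("unknown session")
        return list(session["cart"])


store = SessionStore()
server_a = AppServer("a", store)
server_b = AppServer("b", store)

session_id = server_a.login("alex")      # request handled by server A
server_b.add_to_cart(session_id, "book")  # next request lands on server B
cart = server_a.view_cart(session_id)
```

Because neither server holds the session itself, a load balancer can send each request to any server without the user losing their cart.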

API Caching

Modern applications often use APIs to serve data to users. Instead of processing every request through backend logic or databases, caching API responses improves efficiency. For instance, if product listings are updated only once a day, caching the API response prevents redundant queries, reducing server load and improving response times.
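The product-listing example can be sketched as a small decorator that reuses a response until a time-to-live (TTL) passes. The names `cached_for` and `list_products` are hypothetical, and a fake clock is injected only so that the expiry can be shown without waiting a day.

```python
import functools
import time
from typing import Any, Callable, Dict, Tuple


def cached_for(ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
    """Cache a function's results per argument tuple for ttl_seconds."""

    def decorator(func: Callable[..., Any]) -> Callable[..., Any]:
        entries: Dict[Tuple, Tuple[Any, float]] = {}  # args -> (value, expires_at)

        @functools.wraps(func)
        def wrapper(*args: Any) -> Any:
            now = clock()
            entry = entries.get(args)
            if entry is not None and now < entry[1]:
                return entry[0]  # still fresh: skip the backend entirely
            value = func(*args)
            entries[args] = (value, now + ttl_seconds)
            return value

        return wrapper

    return decorator


ONE_DAY = 24 * 60 * 60
fake_now = [0.0]    # controllable clock for the demonstration
backend_calls = []  # records every call that reached the backend


@cached_for(ttl_seconds=ONE_DAY, clock=lambda: fake_now[0])
def list_products(category: str) -> list:
    backend_calls.append(category)
    return [f"{category}-item-1", f"{category}-item-2"]


list_products("books")    # reaches the backend
list_products("books")    # served from the cache
fake_now[0] += ONE_DAY + 1
list_products("books")    # entry expired, backend is queried again
```

Choosing the TTL to match how often the data actually changes is the main design decision: too short and the cache rarely helps, too long and users see stale listings.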

Caching in Hybrid Environments

Hybrid cloud solutions commonly include cloud-based apps that query on-premises databases. While connection options such as VPNs or dedicated lines can minimize latency, caching data in the cloud improves speed by reducing retrieval times and optimizing bandwidth utilization.

Web Caching

Caching web content such as images, HTML documents, and videos lowers latency and server load. Server-side caching uses proxy servers to store responses from web applications, while client-side caching, such as browser caching, stores previously viewed content locally, resulting in quicker page loads.
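HTTP supports this kind of caching through headers such as `Cache-Control`, `ETag`, and `If-None-Match`, plus the `304 Not Modified` status. The sketch below shows the server side of a conditional request in simplified form. The `respond` function and the one-hour `max-age` are assumptions for illustration, and the comparison handles only a single exact ETag, whereas real servers also handle lists and weak validators.

```python
import hashlib
from typing import Dict, Tuple


def respond(body: bytes, request_headers: Dict[str, str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, payload), skipping the payload if the client copy is current."""
    # Derive a validator from the content, so it changes whenever the body changes.
    etag = '"' + hashlib.sha256(body).hexdigest()[:16] + '"'
    headers = {"ETag": etag, "Cache-Control": "max-age=3600"}

    if request_headers.get("If-None-Match") == etag:
        # The cached copy is still valid: send no body.
        return 304, headers, b""
    return 200, headers, body


page = b"<h1>Hello</h1>"

# First visit: the client has nothing cached.
status_first, headers_first, payload_first = respond(page, {})

# Revisit: the client sends back the ETag it stored.
status_again, _, payload_again = respond(page, {"If-None-Match": headers_first["ETag"]})
```

On the revisit the response carries no body, so the client reuses its local copy and only a small header exchange crosses the network.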

General Caching

Accessing data from memory is much faster than from disk. For applications that don’t need full database functionality, an in-memory key-value store offers a high-performance solution. It’s ideal for frequently accessed data like product categories, user profiles, and reference lists, reducing database load while keeping response times fast.

Integrated Caching

An integrated cache automatically saves frequently requested data from a database in memory, lowering query times and increasing database performance. Because the cached data stays synced with the source database, this strategy improves efficiency while preserving data consistency.
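One common way to keep a cache in step with its database is write-through: every write goes to the database and the cache together. The sketch below assumes that technique purely for illustration, since the text above does not say how a given integrated cache stays synced. The `WriteThroughCache` class is hypothetical and a dictionary stands in for the database.

```python
from typing import Any, Dict


class WriteThroughCache:
    """Reads are cached; writes update the database and the cache together."""

    def __init__(self, database: Dict[str, Any]) -> None:
        self._db = database               # stand-in for the source database
        self._cache: Dict[str, Any] = {}
        self.db_reads = 0

    def read(self, key: str) -> Any:
        if key not in self._cache:
            self.db_reads += 1
            self._cache[key] = self._db[key]
        return self._cache[key]

    def write(self, key: str, value: Any) -> None:
        # Update the source of truth first, then the cached copy.
        self._db[key] = value
        self._cache[key] = value


database = {"price:sku-1": 10}
cache = WriteThroughCache(database)

before = cache.read("price:sku-1")  # loaded from the database, then cached
cache.write("price:sku-1", 12)      # both copies updated
after = cache.read("price:sku-1")   # served from the cache, already current
```

The trade-off is that each write does slightly more work, in exchange for reads that never return a value older than the last write made through the cache.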

Limitations of Basic Caching and Its Impact on Data Processing

Lack of Context in Cached Data

Caching speeds up data retrieval but does not provide insight into the stored information. It only serves as a temporary storage layer, delivering raw data without context, relationships, or additional details.

Challenges in Distributed Data Systems

Data is often spread across multiple backend systems, making it difficult to gain a unified view. Caching alone does not bridge the gap between different data sources, limiting its usefulness for comprehensive analysis.

Real-Time Insights Beyond Caching

Caching provides rapid access to stored data, but it does not facilitate real-time event tracking. Without a real-time processing layer, useful insights may be delayed, reducing the effectiveness of time-critical decisions.

Limitations in Data Processing and Analysis

Caches store and retrieve data efficiently but do not manipulate or analyze it. Businesses relying solely on caching may miss opportunities to extract actionable insights due to delays in processing data from multiple sources.

Fragmented Data Across Systems

Basic caching can result in inconsistent and unstructured data retrieval, especially when pulling information from different systems. Without a standardized approach, integrating and presenting data remains a challenge.

Integrating Siloed Data for Better Insights

To maximize data value, organizations need solutions that integrate and consolidate disparate information. Linking diverse sources enables more accurate analysis and a better understanding of complex information.

Conclusion

Caching is an effective way to improve system performance, reduce database load, and manage high traffic efficiently. It enables faster data retrieval, lowers infrastructure costs, and ensures stable response times across applications. From web caching to database acceleration, caching supports various use cases that optimize operations in both small-scale applications and large distributed systems. Implementing the right caching strategy helps maintain performance and scalability as data demands grow.


Alex Carter

Alex Carter is a cybersecurity enthusiast and tech writer with a passion for online privacy, website performance, and digital security. With years of experience in web monitoring and threat prevention, Alex simplifies complex topics to help businesses and developers safeguard their online presence. When not exploring the latest in cybersecurity, Alex enjoys testing new tech tools and sharing insights on best practices for a secure web.