What Is Screen Scraping? Everything You Need to Know

By Alex Carter on September 23, 2024

Screen scraping is a technique for extracting and repurposing data presented on a screen. It enables organizations and developers to automate data collection, move information between applications, and quickly analyze on-screen content. While it is commonly used for legitimate purposes, such as integrating data from outdated systems into modern applications, it can also raise concerns when used for unauthorized data extraction.

Understanding Screen Scraping

At its core, screen scraping captures data as it is displayed to a user rather than reading it from a database or API. It is often employed to transfer information between applications, automate data collection, or, in some cases, extract data without authorization.

This approach extracts onscreen content, such as text, graphics, or charts, from a desktop, program, or website and transforms it into readable plain text. Screen scraping is faster and more efficient than manual data collection because it automates the identification and extraction of information.

Screen scraping can be done automatically using specialized software or manually by an individual copying data. Scraping programs analyze the user interface (UI), recognize elements, and extract the relevant data. If the content includes images, optical character recognition (OCR) technology is used to convert visual data into text.

Screen scraping can be used in both ethical and unethical ways. Transferring data from old systems to newer applications is a typical ethical use. However, it can also be abused, for example by scraping private data without authorization or copying program code for illegal use.

While screen scraping can be an effective technique for data automation, it must be used in accordance with legal and ethical guidelines to avoid privacy violations and intellectual property problems.

How Screen Scraping Works

Screen scraping can be performed in different ways, depending on the purpose and on how much access the scraper has. For example, someone with direct access to an application's source code might copy parts of it and reuse them in another program, such as one written in Java.

In general, screen scraping extracts onscreen data from a specific user interface (UI) element. Whether it’s a desktop application, web page, or software interface, the scraper identifies visible elements and captures the data. If images are involved, optical character recognition (OCR) is used to convert visual content into text. The extracted data can be unformatted or structured, preserving its original layout when necessary.
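To make "identifying visible elements" more concrete, here is a minimal sketch that pulls the human-readable text out of a page's markup while skipping content a user never sees (scripts, styles, and the page title). It relies only on Python's standard-library `html.parser`; the sample HTML and the names `VisibleTextExtractor` and `extract_visible_text` are illustrative assumptions. A real screen scraper would usually work from a rendered page (for example via a browser driver) or from OCR output rather than a hard-coded string.

```python
from html.parser import HTMLParser


class VisibleTextExtractor(HTMLParser):
    """Collects text nodes a user would see, ignoring <script>, <style>, and <title>."""

    # Tags whose text is not shown in the page body (all have required end tags).
    HIDDEN_TAGS = {"script", "style", "title"}

    def __init__(self) -> None:
        super().__init__()
        self._hidden_depth = 0  # > 0 while inside a tag whose text is not displayed
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HIDDEN_TAGS:
            self._hidden_depth += 1

    def handle_endtag(self, tag):
        if tag in self.HIDDEN_TAGS and self._hidden_depth > 0:
            self._hidden_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._hidden_depth == 0:
            self.chunks.append(text)


def extract_visible_text(html: str) -> list[str]:
    parser = VisibleTextExtractor()
    parser.feed(html)
    parser.close()
    return parser.chunks


page = """<html><head><title>Account</title><style>p {color: red}</style></head>
<body><h1>Balance</h1><p>$1,250.00</p><script>track()</script></body></html>"""

print(extract_visible_text(page))  # ['Balance', '$1,250.00']
```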

Various tools can facilitate screen scraping. Selenium and PhantomJS (a headless browser that is no longer actively maintained) let users extract data from a page's HTML inside a browser, while Unix shell scripts serve as lightweight alternatives for simpler scraping tasks.

In banking, third-party providers (TPPs) may request login credentials from users to access financial transaction data through online banking portals. Budgeting apps, for example, use this method to retrieve details on incoming and outgoing transactions across multiple accounts.
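Once a budgeting app has retrieved a transaction page, it still has to turn the displayed rows into numbers. The sketch below assumes, hypothetically, that each scraped row arrives as a line of text shaped like `2024-09-02  Grocery Store  -54.20` (fields separated by two or more spaces); it parses the rows and totals incoming and outgoing amounts. It deliberately leaves out the login step, and the row format is an assumption for illustration, not any bank's actual layout.

```python
import re
from decimal import Decimal
from typing import NamedTuple


class Transaction(NamedTuple):
    date: str
    description: str
    amount: Decimal  # positive = incoming, negative = outgoing


def parse_row(row: str) -> Transaction:
    # Hypothetical layout: date, description, signed amount, separated by 2+ spaces,
    # so single spaces inside the description are preserved.
    date, description, amount = re.split(r"\s{2,}", row.strip())
    return Transaction(date, description, Decimal(amount.replace(",", "")))


def summarize(rows: list[str]) -> tuple[Decimal, Decimal]:
    """Return (total incoming, total outgoing) for the scraped rows."""
    txns = [parse_row(r) for r in rows if r.strip()]
    incoming = sum((t.amount for t in txns if t.amount > 0), Decimal("0"))
    outgoing = sum((t.amount for t in txns if t.amount < 0), Decimal("0"))
    return incoming, outgoing


rows = [
    "2024-09-01  Salary  2,500.00",
    "2024-09-02  Grocery Store  -54.20",
    "2024-09-03  Electric Bill  -120.75",
]
incoming, outgoing = summarize(rows)
print(incoming, outgoing)  # 2500.00 -174.95
```

`Decimal` is used instead of `float` so that currency amounts add up exactly.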

When transferring data from legacy systems, a screen scraper reformats older terminal-based data so that it works with modern interfaces, such as web browsers or updated operating systems. It also translates input from the new system so that the legacy software receives it as if a user were working at the original terminal.
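Legacy terminal screens typically place each field at a fixed row and column, so a scraper can read them by slicing the screen text at known positions. The sketch below assumes a hypothetical screen where a customer ID, name, and balance sit at made-up offsets; a real layout (for example an IBM 3270 mainframe screen) has to be mapped field by field. It also shows the reverse direction: padding a value from a modern form into the fixed-width input the legacy program expects. `FIELDS`, `read_screen`, and `build_input` are illustrative names.

```python
# Hypothetical field map: name -> (row, start_col, end_col), zero-based, end exclusive.
FIELDS = {
    "customer_id": (2, 12, 20),
    "name": (3, 12, 42),
    "balance": (4, 12, 24),
}


def read_screen(screen: str) -> dict[str, str]:
    """Slice each field out of a fixed-width terminal screen dump."""
    lines = screen.splitlines()
    record = {}
    for field, (row, start, end) in FIELDS.items():
        line = lines[row].ljust(end)  # pad in case trailing spaces were trimmed
        record[field] = line[start:end].strip()
    return record


def build_input(customer_id: str, width: int = 8) -> str:
    """Format a value from a modern UI as the zero-padded field the legacy app expects."""
    if len(customer_id) > width:
        raise ValueError("customer_id too long for legacy field")
    return customer_id.rjust(width, "0")


screen = "\n".join([
    "CUSTOMER INQUIRY",
    "",
    "CUST ID:".ljust(12) + "00012345",
    "NAME:".ljust(12) + "JANE DOE",
    "BALANCE:".ljust(12) + "1250.00",
])

print(read_screen(screen))  # {'customer_id': '00012345', 'name': 'JANE DOE', 'balance': '1250.00'}
print(build_input("12345"))  # 00012345
```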

Common Uses of Screen Scraping

Screen scraping serves a variety of purposes, ranging from legitimate data collection to more controversial applications. These use cases generally fall into two categories:

- Scraping sensitive data – Requires users to share login credentials, allowing a third party to access and extract private information (also known as credential sharing);

- Scraping publicly available data – Gathers openly accessible information for purposes like price comparisons, ad verification, and modernizing legacy systems.

Examples of Credential-Based Screen Scraping

- Accessing and analyzing bank accounts – Financial services use screen scraping to log into user accounts, retrieve transaction data, and provide insights outside the banking app;

- Initiating payments – Some providers use screen scraping to facilitate transactions, such as moving funds between accounts for better interest rates in budgeting apps;

- Conducting affordability checks – Lenders and financial institutions may use screen scraping to quickly review a borrower’s income and spending before approving a loan;

- Storing financial data – Some organizations gather and save user account information over time in order to create a more complete financial picture;

- Unauthorized data extraction – Although most screen scraping is done with the user's permission, hackers can use it to obtain sensitive information from unsuspecting users.

While screen scraping can be useful for financial management and automation, it also raises concerns around privacy, security, and data control, particularly when login credentials are involved.

Ways to Deter Screen Scraping

There's no guaranteed way to stop unethical screen scraping entirely, but businesses can make it more difficult and detect suspicious activity. Signs of scraping attempts include unusual user agents, disabled JavaScript, and a high number of repetitive requests in a short period. Implementing security measures can help reduce the risk.
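The warning signs listed above can be combined into a simple check over request logs. The sketch below is illustrative only: the `Request` record, the list of suspicious user-agent substrings, the threshold of 100 requests per 60 seconds, and the idea of inferring "JavaScript disabled" from a hypothetical `ran_js_beacon` flag (set when a small script on the page reports back) are all assumptions for the example, not a standard detection method.

```python
from collections import defaultdict
from dataclasses import dataclass

SUSPICIOUS_AGENTS = ("curl", "python-requests", "wget", "headless")  # assumed list
MAX_REQUESTS = 100   # assumed threshold
WINDOW_SECONDS = 60  # assumed window


@dataclass
class Request:
    ip: str
    user_agent: str
    timestamp: float     # seconds since epoch
    ran_js_beacon: bool  # hypothetical: did the page's JavaScript check-in fire?


def flag_clients(requests: list[Request]) -> dict[str, set[str]]:
    """Return the reasons each IP looks like a scraper (IPs with no reasons are omitted)."""
    reasons: dict[str, set[str]] = defaultdict(set)
    by_ip: dict[str, list[float]] = defaultdict(list)

    for r in requests:
        agent = r.user_agent.lower()
        if not agent or any(s in agent for s in SUSPICIOUS_AGENTS):
            reasons[r.ip].add("unusual user agent")
        if not r.ran_js_beacon:
            reasons[r.ip].add("javascript disabled")
        by_ip[r.ip].append(r.timestamp)

    # Repetitive requests: too many hits from one IP inside any sliding window.
    for ip, times in by_ip.items():
        times.sort()
        start = 0
        for end, t in enumerate(times):
            while t - times[start] >= WINDOW_SECONDS:
                start += 1
            if end - start + 1 > MAX_REQUESTS:
                reasons[ip].add("high request rate")
                break

    return dict(reasons)


now = 1_700_000_000.0
log = [Request("203.0.113.5", "python-requests/2.31", now + i * 0.1, False) for i in range(150)]
log.append(Request("198.51.100.7", "Mozilla/5.0 (Windows NT 10.0)", now, True))
flags = flag_clients(log)
print(flags)
```

In practice a single signal is weak evidence on its own (some legitimate users disable JavaScript), so checks like these are better used to score or rate-limit clients than to block them outright.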

Methods to Reduce Screen Scraping Risks:

- Require login credentials – While this won’t stop scraping, it helps track and identify users performing it;

- Set rate limits – Restricting the number of requests from a single IP in a short time can slow down automated scraping attempts;

- Use CAPTCHAs – These challenges make it harder for bots to scrape data by requiring human verification;

- Deploy web application firewalls (WAFs) – A WAF can detect and block scraping attempts based on behavioral patterns;

- Run fraud detection tools – Software that monitors for unusual access patterns can help identify scrapers in real time;

- Render content as images – Converting key content to images can make it harder for scrapers that rely on text extraction, though it may also impact SEO and accessibility.

While these measures can help deter screen scraping, they aren’t foolproof. Businesses must balance security with user experience, ensuring that protective measures don’t inconvenience legitimate users or harm search engine visibility.

Key Advantages of Screen Scraping

Screen scraping offers several benefits that make data collection more efficient and scalable.

- Speed – Automates data extraction, significantly reducing the time needed compared to manual collection;

- Scalability – Can process and retrieve large amounts of data quickly;

- Accuracy – Reduces human errors that often occur in repetitive data entry tasks;

- Customization – Can be tailored to extract specific types of information based on individual needs;

- Structured Data Output – Converts extracted data into a machine-readable format, making it easier to use in other applications;

- Efficient Integration – Works with existing systems, such as CRM platforms, allowing for automated data updates and improved workflow efficiency.
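Structured data output usually means writing the scraped fields to a format such as CSV or JSON that other tools can ingest. A short sketch using only Python's standard `csv` and `json` modules; the product records are made-up sample data and the function names are illustrative.

```python
import csv
import io
import json

records = [
    {"product": "Desk Lamp", "price": "24.99", "in_stock": True},
    {"product": "Office Chair", "price": "149.00", "in_stock": False},
]


def to_csv(rows: list[dict]) -> str:
    """Serialize scraped records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows: list[dict]) -> str:
    """Serialize scraped records as a pretty-printed JSON array."""
    return json.dumps(rows, indent=2)


print(to_csv(records))
print(to_json(records))
```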

Limitations and Risks of Screen Scraping

While screen scraping offers efficiency, it also comes with challenges that need to be considered.

- Ongoing Maintenance – Websites frequently update their structure, which can break scraping tools, requiring adjustments and regular upkeep;

- No Built-in Data Analysis – Screen scraping extracts data but doesn’t interpret it. Additional processing is needed to generate insights or use the data effectively;

- Potential for Misuse – While it has legitimate applications, screen scraping can also be exploited for unauthorized data extraction or content theft.
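Because layout changes often break scrapers silently (a field simply comes back empty or malformed), a common safeguard is to validate every extracted record and fail loudly when it no longer looks right. A minimal sketch, where the required field names, the price pattern, and `LayoutChangedError` are hypothetical:

```python
import re

REQUIRED_FIELDS = ("product", "price")          # hypothetical schema
PRICE_PATTERN = re.compile(r"^\d+(\.\d{2})?$")  # e.g. "24.99"


class LayoutChangedError(Exception):
    """Raised when scraped data no longer matches what the scraper expects."""


def validate(record: dict[str, str]) -> dict[str, str]:
    missing = [f for f in REQUIRED_FIELDS if not record.get(f, "").strip()]
    if missing:
        raise LayoutChangedError(f"missing fields: {missing}")
    if not PRICE_PATTERN.match(record["price"]):
        raise LayoutChangedError(f"unexpected price format: {record['price']!r}")
    return record


validate({"product": "Desk Lamp", "price": "24.99"})  # passes
try:
    validate({"product": "Desk Lamp", "price": ""})
except LayoutChangedError as err:
    print(err)  # missing fields: ['price']
```

Running a check like this on every scrape turns a quiet data-quality problem into an alert that the scraper needs maintenance.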

Popular Screen Scraping Tools

For those looking to automate screen scraping instead of manually extracting data, various tools can simplify the process:

UiPath – A robotic process automation (RPA) tool that captures bitmap data, supports full-text scraping, and uses OCR for extracting on-screen information.

FMiner – A Windows and macOS tool that combines screen scraping, web data extraction, and crawling for efficient data collection.

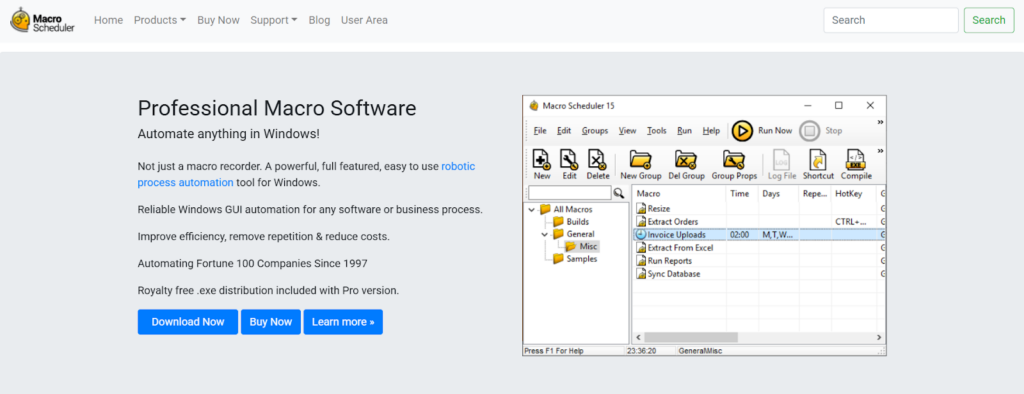

Macro Scheduler – Allows users to automate software processes on Windows by creating macros and scripts for screen scraping, including OCR-based extraction.

ScreenScraper Studio – Lets users define what to extract and generate scraping code in C++, C#, Visual Basic 6.0, and JavaScript for integration into applications.

Existek – Offers screen scraping automation for desktop apps, featuring OCR, API interception, plugins, and browser extensions to streamline data extraction.

Diffbot – An AI-powered data scraping tool that extracts text, videos, and images, formatting the data into JSON or CSV for easy processing.

These solutions offer a variety of automation and customization options, making screen scraping more effective for businesses and developers.

Screen Scraping vs. Web Scraping: Key Differences

Screen scraping and web scraping are both data extraction techniques, but they differ in how they capture and retrieve information.

Screen scraping is the process of extracting visual information from a website or application's user interface (UI). It collects text, pictures, and links as they appear on screen, often mimicking human actions to acquire data. In contrast, web scraping retrieves data directly from a website's HTML, XML, or JSON, which gives it access to elements that are not displayed, such as metadata and API responses, rather than just what is visible.
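The difference is easy to see on a single page. In the sketch below, the same HTML contains a product name and price that appear on screen, plus metadata (a `<meta>` description and a `data-sku` attribute) that a browser never displays. Extraction based only on what is shown would see the first; parsing the markup, as web scraping does, reaches both. The markup, attribute names, and the `MarkupScraper` class are illustrative assumptions.

```python
from html.parser import HTMLParser

PAGE = """<html><head>
<meta name="description" content="Ergonomic desk lamp, LED">
</head><body>
<div class="product" data-sku="LAMP-042"><span>Desk Lamp</span> <span>$24.99</span></div>
</body></html>"""


class MarkupScraper(HTMLParser):
    """Collects both visible text and attributes that are never rendered."""

    def __init__(self) -> None:
        super().__init__()
        self.visible: list[str] = []
        self.hidden: dict[str, str] = {}

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        # <meta> tags carry page metadata that is not shown on screen.
        if tag == "meta" and "name" in attrs:
            self.hidden[attrs["name"]] = attrs.get("content") or ""
        # data-* attributes are also invisible to the user.
        for key, value in attrs.items():
            if key.startswith("data-"):
                self.hidden[key] = value or ""

    def handle_data(self, data):
        if data.strip():
            self.visible.append(data.strip())


scraper = MarkupScraper()
scraper.feed(PAGE)
scraper.close()
print(scraper.visible)  # ['Desk Lamp', '$24.99']
print(scraper.hidden)   # {'description': 'Ergonomic desk lamp, LED', 'data-sku': 'LAMP-042'}
```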

The type of data collected also differs. Screen scraping captures onscreen elements without necessarily accessing the underlying website code. It retrieves text, graphics, and links according to how they appear in the interface. Web scraping, on the other hand, collects both structured and unstructured data, such as tables, product listings, database entries, photos, and multimedia material.

Automation also distinguishes the two approaches. Screen scraping uses automation to capture visual data, but it often requires interacting with interface elements such as forms and menus. Web scraping, by contrast, can extract data without rendering the page, which makes it more efficient for collecting large volumes of data.

10 Practical Applications of Screen Scraping

Screen scraping is widely used across industries for data collection and analysis. Here are ten common use cases:

- E-Commerce Price Monitoring – Retailers track competitors' prices, discounts, and product availability to adjust their pricing strategy;

- Real Estate Market Analysis – To examine market trends, real estate experts compile information on property listings, pricing, and locations;

- Social Media Sentiment Analysis – To determine how the general public feels about businesses, marketers gather user reviews, comments, and trends from social media sites;

- Job Market Research – HR teams scrape job postings to analyze hiring trends, salary data, and in-demand skills;

- News Aggregation – Media platforms compile news articles from multiple sources to create customized news feeds;

- Financial Data Analysis – Investors and analysts monitor financial news, stock movements, and economic events for informed decision-making;

- Competitive Pricing in Hospitality – Hotels and airlines track competitor pricing to adjust room rates and ticket costs dynamically;

- Product Reviews and Ratings – Brands collect consumer feedback from review sites to enhance product development;

- Weather Data Collection – Meteorologists scrape weather websites for historical data and climate pattern analysis;

- Healthcare Provider Comparisons – Patients and healthcare organizations compare ratings, services, and feedback to make better-informed choices.

Screen scraping helps businesses and individuals gain valuable insights by automating data collection from various sources.
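For a use case like e-commerce price monitoring, scraped prices rarely arrive in a clean numeric form. The sketch below normalizes price strings as they appear on screen and reports which competitors undercut a retailer's own price. The shop names and prices are made up, and it assumes all prices share one currency and use `.` as the decimal separator; `parse_price` and `undercut_by` are hypothetical helpers.

```python
import re
from decimal import Decimal


def parse_price(text: str) -> Decimal:
    """Turn on-screen text like '$1,299.99' or 'Now only 1299.99 USD' into a Decimal."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        raise ValueError(f"no price found in {text!r}")
    return Decimal(match.group().replace(",", ""))


def undercut_by(own_price: Decimal, competitors: dict[str, str]) -> dict[str, Decimal]:
    """Return how much cheaper each competitor is (only those below our price)."""
    result = {}
    for name, shown in competitors.items():
        diff = own_price - parse_price(shown)
        if diff > 0:
            result[name] = diff
    return result


scraped = {"shop_a": "$1,249.00", "shop_b": "Now only 1299.99 USD", "shop_c": "$1,350"}
print(undercut_by(Decimal("1299.99"), scraped))  # {'shop_a': Decimal('50.99')}
```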

Conclusion

Screen scraping extracts and repurposes onscreen data to automate collection, integrate information, and improve efficiency in areas like finance, market research, and data migration. While useful, it comes with challenges such as maintenance, security risks, and ethical concerns. Organizations must ensure compliance, protect user privacy, and implement safeguards like rate limiting, CAPTCHA, and fraud detection to prevent unauthorized data extraction while maintaining usability.

Posted in blog, Web Applications

Alex Carter

Alex Carter is a cybersecurity enthusiast and tech writer with a passion for online privacy, website performance, and digital security. With years of experience in web monitoring and threat prevention, Alex simplifies complex topics to help businesses and developers safeguard their online presence. When not exploring the latest in cybersecurity, Alex enjoys testing new tech tools and sharing insights on best practices for a secure web.