Deploying an HBase cluster with Whirr goes smoothly when it is configured properly: defining cluster settings, launching instances, and managing connections. Whirr simplifies provisioning, especially on cloud platforms such as AWS EC2, and a solid grasp of setup, security, and management keeps the cluster running efficiently as it scales.

Hardware Requirements for HBase Deployment

When setting up an HBase cluster, selecting the appropriate hardware is critical for performance and scalability. HBase runs on commodity hardware; however, commodity does not mean low-end. Key considerations include:

- CPU: At least 8 cores per node, with 8-12 recommended. More cores help handle concurrent read/write operations;

- Memory (RAM): 24-32 GB RAM per node is ideal for handling RegionServers and caching;

- Storage: Use SSDs for read/write workloads that need high performance. A sound layout has at least four drives per node; on DataNodes these are usually mounted individually (JBOD) rather than combined in RAID, because HDFS replication already provides redundancy;

- Network: High-bandwidth networking (10 Gbps or higher) is recommended to avoid bottlenecks in large-scale deployments.

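For AWS-based deployments, c5.2xlarge (8 vCPUs, 16 GiB) or m5.xlarge (4 vCPUs, 16 GiB) instances provide a reasonable balance between performance and cost for smaller clusters. Note that both offer less memory than recommended above; m5.2xlarge (8 vCPUs, 32 GiB) meets both the CPU and memory guidelines.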
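Comparing instance specs against the per-node guidelines is easy to script. The sketch below hard-codes the published vCPU and memory figures for c5.2xlarge and m5.xlarge, plus m5.2xlarge for comparison, and takes its thresholds (8 cores, 24 GB) from the list above. Keep in mind that an EC2 vCPU is a hyperthread, not a physical core; the function name is illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceSpec:
    name: str
    vcpus: int
    memory_gib: int


# Published specs for the instance types discussed in this section.
SPECS = {
    "c5.2xlarge": InstanceSpec("c5.2xlarge", 8, 16),
    "m5.xlarge": InstanceSpec("m5.xlarge", 4, 16),
    "m5.2xlarge": InstanceSpec("m5.2xlarge", 8, 32),
}

# Thresholds taken from the hardware guidelines above.
MIN_CORES = 8
MIN_MEMORY_GIB = 24


def shortfalls(instance_type: str) -> list[str]:
    """Return the guidelines an instance type misses (empty list if none)."""
    spec = SPECS[instance_type]
    problems = []
    if spec.vcpus < MIN_CORES:
        problems.append(f"{spec.vcpus} vCPUs < {MIN_CORES}")
    if spec.memory_gib < MIN_MEMORY_GIB:
        problems.append(f"{spec.memory_gib} GiB < {MIN_MEMORY_GIB} GiB")
    return problems


for name in SPECS:
    print(name, shortfalls(name) or "meets guidelines")
```

To check other candidates, add them to the table with their published specs.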

Cluster Architecture and Role Distribution

A distributed HBase setup consists of several key components that must be correctly allocated across nodes for optimal performance:

- HBase Master: Oversees region assignments and cluster health. It should run on dedicated hardware for reliability;

- ZooKeeper: Manages distributed coordination. High availability requires at least 3 ZooKeeper nodes, and the ensemble size should be odd (3, 5, ...);

- RegionServers: These handle data storage and processing. They should be colocated with HDFS DataNodes for data locality optimization;

- HDFS DataNodes: Store the actual data files. Each node should have sufficient storage and be configured for data replication (default: 3x).
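The three-node minimum follows from ZooKeeper's majority quorum. An ensemble of n servers stays available while a strict majority (n // 2 + 1) is up, so it tolerates (n - 1) // 2 failures. The short calculation below shows why a fourth server adds no fault tolerance over three.

```python
def quorum_size(ensemble: int) -> int:
    """Smallest strict majority of an ensemble of `ensemble` servers."""
    return ensemble // 2 + 1


def tolerated_failures(ensemble: int) -> int:
    """Servers that can fail while a majority remains available."""
    return ensemble - quorum_size(ensemble)


for n in range(1, 6):
    print(f"{n} servers: quorum {quorum_size(n)}, "
          f"tolerates {tolerated_failures(n)} failure(s)")
```

Three and four servers both tolerate one failure, and five tolerate two. This is why ensembles are sized with odd numbers.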

Recommendation: Avoid colocating RegionServers with Hadoop TaskTrackers in low-latency applications. MapReduce jobs compete with HBase for CPU, memory, and disk I/O, which can degrade HBase's real-time performance.

The proper allocation of resources in a distributed HBase architecture is critical for sustaining performance and scalability.

Optimizing HBase Configuration for Performance

To ensure smooth operations, HBase requires fine-tuning of key configuration parameters:

Memory Allocation

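Set the Java heap size for RegionServers to 8-16 GB. The following options are typically set through HBASE_REGIONSERVER_OPTS in conf/hbase-env.sh. They configure an 8 GB heap with the ParNew and CMS collectors:

export HBASE_REGIONSERVER_OPTS="-Xmx8g -Xms8g -Xmn128m -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=70"

These options target JDK 8: -XX:+UseParNewGC was removed in JDK 10, and the CMS collector was removed in JDK 14.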
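A small helper can generate the hbase-env.sh line for a given heap size and JDK. This is a sketch under stated assumptions. It keeps -Xms equal to -Xmx so the heap is never resized at runtime, and it enforces the 8-16 GB range from this section. It also assumes G1 (-XX:+UseG1GC) as the collector on JDK 9 and later, where the ParNew/CMS flags are deprecated or gone. The function name is hypothetical.

```python
def regionserver_opts(heap_gb: int, java_major: int) -> str:
    """Build an HBASE_REGIONSERVER_OPTS export line for conf/hbase-env.sh."""
    if not 8 <= heap_gb <= 16:
        raise ValueError("heap outside the 8-16 GB range suggested above")
    flags = [f"-Xmx{heap_gb}g", f"-Xms{heap_gb}g"]  # fixed-size heap
    if java_major <= 8:
        # The ParNew/CMS flag set from this section; only valid on older JDKs.
        flags += ["-Xmn128m", "-XX:+UseParNewGC", "-XX:+UseConcMarkSweepGC",
                  "-XX:CMSInitiatingOccupancyFraction=70"]
    else:
        # Assumption: G1 as the replacement collector on JDK 9+.
        flags += ["-XX:+UseG1GC"]
    return 'export HBASE_REGIONSERVER_OPTS="' + " ".join(flags) + '"'


print(regionserver_opts(8, 8))
print(regionserver_opts(16, 17))
```

For JDK 8 and an 8 GB heap, the first call reproduces the options shown above.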

Enable the MemStore-Local Allocation Buffer (MSLAB) to reduce heap fragmentation. It is enabled by default since HBase 0.92; setting it explicitly documents the intent:

<property>
  <name>hbase.hregion.memstore.mslab.enabled</name>
  <value>true</value>
</property>
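When several properties need to be set, generating the hbase-site.xml configuration block from a dictionary avoids hand-editing mistakes. This sketch uses Python's standard xml.etree.ElementTree. The function name and the chosen properties are illustrative, and ElementTree.indent requires Python 3.9 or later.

```python
import xml.etree.ElementTree as ET


def hbase_site_xml(properties: dict[str, str]) -> str:
    """Render a Hadoop-style <configuration> document, one <property> per entry."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-print with two-space indentation (Python 3.9+)
    return ET.tostring(root, encoding="unicode")


print(hbase_site_xml({
    "hbase.hregion.memstore.mslab.enabled": "true",
    "hbase.hregion.max.filesize": str(10 * 1024**3),
}))
```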

HDFS and RegionServer Tuning

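Raise the maximum number of open file descriptors to 32768 for the users that run HBase and HDFS. Add the entries to /etc/security/limits.conf; hbaseuser and hadoopuser below are placeholders for those accounts. Without this, the common default of 1024 is quickly exhausted and processes fail with "Too many open files" errors:

hbaseuser - nofile 32768

hadoopuser - nofile 32768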
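Entries in limits.conf take four fields: domain, type (soft, hard, or - for both), item, and value. A three-field line such as hbaseuser nofile 32768 is therefore malformed. The sketch below parses limits.conf-style text, rejects malformed lines, and reports each user's nofile value. It also reads the soft limit of the current process with the standard resource module (Unix only). The function names are hypothetical, and hbaseuser/hadoopuser are the placeholders used in this section.

```python
import resource

REQUIRED_NOFILE = 32768


def nofile_entries(limits_text: str) -> dict[str, int]:
    """Map each domain to its nofile value from limits.conf-style text."""
    entries = {}
    for raw in limits_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        fields = line.split()
        if len(fields) != 4:
            raise ValueError(f"expected 4 fields, got {len(fields)}: {raw!r}")
        domain, _type, item, value = fields
        if item == "nofile":
            entries[domain] = int(value)
    return entries


def current_nofile_soft_limit() -> int:
    """Soft open-file limit of the running process (Unix only)."""
    soft, _hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    return soft


config = """
hbaseuser  -  nofile  32768
hadoopuser -  nofile  32768
"""
for user, limit in nofile_entries(config).items():
    print(user, "ok" if limit >= REQUIRED_NOFILE else "too low")
print("this process:", current_nofile_soft_limit())
```

New limits apply only to sessions started after the change. Running the last check from a process launched as the service user shows whether the change took effect.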

Set the maximum region size, which caps how large a region can grow before HBase splits it. Larger regions mean fewer regions per RegionServer:

<property>
  <name>hbase.hregion.max.filesize</name>
  <value>10737418240</value> <!-- 10 GB -->
</property>
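The value 10737418240 is 10 × 1024³ bytes (10 GiB). One way to reason about this setting is to estimate how many regions a table of a given size needs, and how many each RegionServer will then host. The sketch below does that arithmetic for hypothetical inputs (a 2 TiB table on 5 RegionServers). Real region counts also depend on the split policy, pre-splitting, and compactions, so treat the result as a lower bound.

```python
import math

GIB = 1024**3
MAX_FILESIZE = 10 * GIB  # hbase.hregion.max.filesize from the snippet above
assert MAX_FILESIZE == 10737418240


def estimated_regions(table_bytes: int, max_filesize: int = MAX_FILESIZE) -> int:
    """Lower bound on region count if each region grew to max_filesize."""
    return max(1, math.ceil(table_bytes / max_filesize))


def regions_per_server(table_bytes: int, region_servers: int) -> float:
    """Average regions hosted per RegionServer under that lower bound."""
    return estimated_regions(table_bytes) / region_servers


# Hypothetical example: a 2 TiB table on 5 RegionServers.
table = 2 * 1024 * GIB
print(estimated_regions(table), regions_per_server(table, 5))
```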

Avoiding Frequent Garbage Collection Pauses

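Use the CMS garbage collector to reduce long GC pauses. CMS performs most old-generation collection concurrently with application threads, so stop-the-world pauses are shorter, though not eliminated. This flag is already part of the RegionServer options above:

-XX:+UseConcMarkSweepGC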

Launching an HBase Cluster

To start an HBase cluster using Whirr, run:

$ whirr launch-cluster --config hbase-cluster.properties

During setup, Whirr prints status messages to the console as the cluster starts. Debug logs are written to whirr.log in the current working directory. Once the cluster is fully operational, the console displays the URL of the HBase web UI.
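If you script launches, a thin wrapper around the whirr command line keeps the launch, list, and destroy invocations consistent. This is a sketch. It assumes the whirr executable is on PATH (otherwise use bin/whirr from the distribution directory) and passes only the --config option used in this section. Both function names are hypothetical.

```python
import shutil
import subprocess

ACTIONS = {"launch-cluster", "list-cluster", "destroy-cluster"}


def whirr_command(action: str, config: str, whirr: str = "whirr") -> list[str]:
    """Build an invocation such as `whirr launch-cluster --config FILE`."""
    if action not in ACTIONS:
        raise ValueError(f"unsupported action: {action}")
    return [whirr, action, "--config", config]


def run_whirr(action: str, config: str) -> None:
    """Run whirr; raises CalledProcessError if it exits non-zero."""
    if shutil.which("whirr") is None:
        raise FileNotFoundError("whirr is not on PATH")
    # Status messages go to the terminal; debug output lands in ./whirr.log.
    subprocess.run(whirr_command(action, config), check=True)


print(whirr_command("launch-cluster", "hbase-cluster.properties"))
```

Calling run_whirr("destroy-cluster", ...) with the same configuration file tears the cluster down again.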

Deploying on AWS EC2

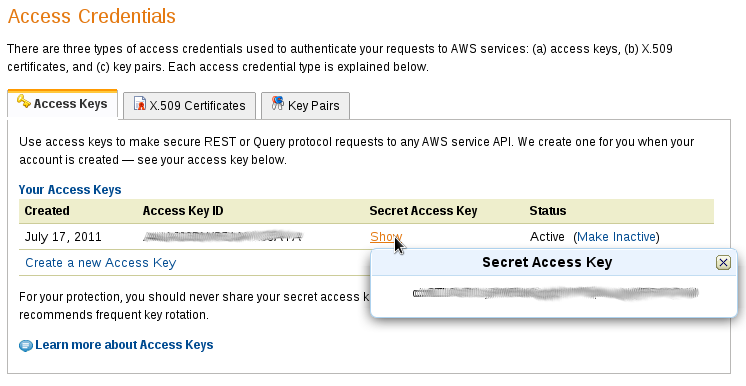

Whirr can create HBase clusters on AWS EC2. The configuration below reads your AWS credentials from environment variables, so export them first:

export AWS_ACCESS_KEY_ID=<your-access-key>

export AWS_SECRET_ACCESS_KEY=<your-secret-key>
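The Whirr configuration reads these values through ${env:...} references. If either variable is unset, the launch has no valid credentials. A pre-flight check like the sketch below reports which variables are missing without printing their values. The function name is hypothetical.

```python
import os

REQUIRED_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_credentials(env=None) -> list[str]:
    """Names of required AWS variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


missing = missing_credentials()
if missing:
    print("Set these before launching:", ", ".join(missing))
else:
    print("AWS credentials found in the environment")
```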

Once your credentials are set, define the cluster configuration in a file, such as hbase_aws_setup:

whirr.cluster-name=myhbasecluster

whirr.instance-templates=1 zookeeper+hadoop-namenode+hadoop-jobtracker+hbase-master,5 hadoop-datanode+hadoop-tasktracker+hbase-regionserver

hbase-site.dfs.replication=3

whirr.zookeeper.install-function=install_hbase_zookeeper

whirr.zookeeper.configure-function=configure_hbase_zookeeper

whirr.hadoop.install-function=install_hbase_hadoop

whirr.hadoop.configure-function=configure_hbase_hadoop

whirr.hbase.install-function=install_hbase_services

whirr.hbase.configure-function=configure_hbase_services

whirr.provider=aws-ec2

whirr.identity=${env:AWS_ACCESS_KEY_ID}

whirr.credential=${env:AWS_SECRET_ACCESS_KEY}

whirr.hardware-id=c5.2xlarge

whirr.image-id=us-west-2/ami-07ebfd5b3428b6f4d

whirr.location-id=us-west-2
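The whirr.instance-templates value is a comma-separated list of groups, each a node count followed by '+'-joined roles. Parsing it makes it easy to check a layout against the architecture guidance above, for example by counting ZooKeeper nodes or flagging RegionServers colocated with TaskTrackers. The parser below is a sketch of the format as it appears in this section, not Whirr's own code. Its function names are hypothetical.

```python
from collections import Counter


def parse_templates(value: str) -> list[tuple[int, set[str]]]:
    """Split '1 a+b,5 c+d' into [(1, {'a', 'b'}), (5, {'c', 'd'})]."""
    groups = []
    for group in value.split(","):
        count, roles = group.strip().split(maxsplit=1)
        groups.append((int(count), set(roles.split("+"))))
    return groups


def role_counts(groups: list[tuple[int, set[str]]]) -> Counter:
    """Total number of nodes running each role."""
    counts = Counter()
    for count, roles in groups:
        for role in roles:
            counts[role] += count
    return counts


def layout_warnings(groups: list[tuple[int, set[str]]]) -> list[str]:
    """Flag layouts that conflict with the architecture guidance."""
    warnings = []
    if role_counts(groups)["zookeeper"] < 3:
        warnings.append("fewer than 3 ZooKeeper nodes: no quorum fault tolerance")
    if any({"hbase-regionserver", "hadoop-tasktracker"} <= roles for _, roles in groups):
        warnings.append("RegionServers share nodes with TaskTrackers")
    return warnings


templates = ("1 zookeeper+hadoop-namenode+hadoop-jobtracker+hbase-master,"
             "5 hadoop-datanode+hadoop-tasktracker+hbase-regionserver")
groups = parse_templates(templates)
print(role_counts(groups)["hbase-regionserver"])  # 5
print(layout_warnings(groups))
```

For the sample layout, both warnings fire: it runs a single ZooKeeper node, and its RegionServers share nodes with TaskTrackers. That may be acceptable for a test cluster but is worth revisiting for production.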

Run the following command to launch the cluster using this configuration:

bin/whirr launch-cluster --config hbase_aws_setup

Once the cluster is running, you can verify the instances and their roles by listing the active nodes:

bin/whirr list-cluster --config hbase_aws_setup

The output of this command will display instance IDs, AMI details, public and private IP addresses, and assigned roles (e.g., zookeeper, hadoop-namenode, hbase-master).

Setting Up an SSH Proxy

To keep the cluster's network secure, all client traffic is routed through the master node over an SSH tunnel that acts as a SOCKS proxy on local port 6666. When the cluster starts, Whirr writes a proxy script to the ~/.whirr/<cluster-name> directory.

To start the proxy, execute the following command:

$ . ~/.whirr/myhbasecluster/hbase-secure-proxy.sh

To stop the proxy, press Ctrl-C in the terminal where the proxy is running.
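Before pointing a client at the cluster, you can confirm that the proxy is listening. The sketch below attempts a TCP connection to localhost on port 6666, the SOCKS port described above. It only proves that something accepts connections on that port, not that the tunnel reaches the cluster. The function name is hypothetical.

```python
import socket


def port_open(host: str = "localhost", port: int = 6666, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


print("SOCKS proxy listening" if port_open() else "proxy not running on port 6666")
```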

Configuring the HBase Client

After the cluster launches successfully, Whirr creates an hbase-site.xml file in the ~/.whirr/<cluster-name> directory. Use this file to configure the local HBase client so that it can connect to the cluster.
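To see which cluster the generated file points the client at, you can read properties straight out of it. The sketch below parses a Hadoop-style configuration file with xml.etree.ElementTree. hbase.zookeeper.quorum is a standard HBase client property, but the exact set of properties Whirr writes may vary, so look values up with .get(). The function name and the cluster directory myhbasecluster are assumptions for illustration.

```python
import os
import xml.etree.ElementTree as ET


def read_properties(path: str) -> dict[str, str]:
    """Return {name: value} for every <property> in a Hadoop-style config file."""
    root = ET.parse(path).getroot()
    return {
        prop.findtext("name"): prop.findtext("value")
        for prop in root.iter("property")
    }


site = os.path.expanduser("~/.whirr/myhbasecluster/hbase-site.xml")
if os.path.exists(site):
    props = read_properties(site)
    print("ZooKeeper quorum:", props.get("hbase.zookeeper.quorum"))
```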

Updating HBase Configuration

To configure the local HBase client, create a dedicated configuration directory from the default one and copy the generated hbase-site.xml into it. The directory name conf.whirr is only an example, and /etc/hbase/conf.dist assumes a packaged (e.g. CDH) installation:

$ sudo cp -r /etc/hbase/conf.dist /etc/hbase/conf.whirr

$ sudo cp ~/.whirr/myhbasecluster/hbase-site.xml /etc/hbase/conf.whirr/

Then register that directory as the hbase-conf alternative. On Ubuntu, Debian, or SLES systems, run:

$ sudo update-alternatives --install /etc/hbase/conf hbase-conf /etc/hbase/conf.whirr 50

$ update-alternatives --display hbase-conf

On Red Hat systems, run:

$ sudo alternatives --install /etc/hbase/conf hbase-conf /etc/hbase/conf.whirr 50

$ alternatives --display hbase-conf

Once the configuration is in place, the local HBase client can connect to the cluster and interact with the database.

Running HBase Shell

With the cluster running and the client configured, you can now use the HBase shell to manage your data. Launch the HBase shell by running:

$ hbase shell

To verify that the connection is working, list all available tables:

hbase> list

Creating and Managing Tables

To create a new table in HBase, use the following command:

hbase> create 'user_data', 'info'

In this example, 'info' is the column family for the table.

To insert data into the table, run:

hbase> put 'user_data', 'user1', 'info:name', 'John Doe'

To retrieve all data stored in the table, use:

hbase> scan 'user_data'

This command will return all rows, columns, and values stored within the table.
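Conceptually, each put writes one cell addressed by a row key and a family:qualifier column, and a scan returns rows in lexicographic row-key order. The toy in-memory model below illustrates only those semantics. It is not the HBase client API, it ignores timestamps and versions, and the class and method names are hypothetical. Python's string ordering matches HBase's byte ordering only for ASCII keys.

```python
from collections import defaultdict


class ToyTable:
    """In-memory stand-in for an HBase table: row -> {'family:qualifier': value}."""

    def __init__(self, name: str, *families: str):
        self.name = name
        self.families = set(families)
        self.rows: dict[str, dict[str, str]] = defaultdict(dict)

    def put(self, row: str, column: str, value: str) -> None:
        """Write one cell; the column family must have been declared up front."""
        family = column.split(":", 1)[0]
        if family not in self.families:
            raise KeyError(f"unknown column family {family!r}")
        self.rows[row][column] = value

    def scan(self):
        """Yield (row, column, value) in sorted row-key, then column, order."""
        for row in sorted(self.rows):
            for column in sorted(self.rows[row]):
                yield row, column, self.rows[row][column]


table = ToyTable("user_data", "info")
table.put("user1", "info:name", "John Doe")
for cell in table.scan():
    print(cell)  # ('user1', 'info:name', 'John Doe')
```

Only column families are fixed at table creation; qualifiers such as name can be added freely with each put.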

Shutting Down the HBase Cluster

When you’re finished using the cluster, shut it down properly to release its resources and avoid unnecessary costs. To terminate the cluster, run:

$ whirr destroy-cluster --config hbase-cluster.properties

If an SSH proxy was started earlier, make sure to stop it as well.

Destroying an AWS EC2-Based Cluster

For clusters deployed on AWS EC2, use the following command to terminate all instances and remove configurations:

bin/whirr destroy-cluster --config hbase_aws_setup

Warning: Destroying the cluster permanently deletes all data stored in it. Back up any important information before shutting the cluster down.

Conclusion

Setting up an HBase cluster with Whirr requires configuring the environment, launching instances, and securing the system. Deploying on AWS EC2 adds steps like authentication and resource allocation. Once running, configuring the HBase client ensures smooth data management. Proper shutdown prevents wasted resources, while best practices maintain stability and performance.